In the future, robots and humans will need to work together on tasks in a variety of settings, ranging from industrial robotics to elder care. Part of these tasks is the transfer of objects between humans and robots: a human might need a particular tool, or a robot might be tasked with putting away some part. We recently looked at human-to-robot handover, in particular at how to enable fluid, safe handovers when the human might be holding the object in many different ways and the object itself might not be clearly visible.

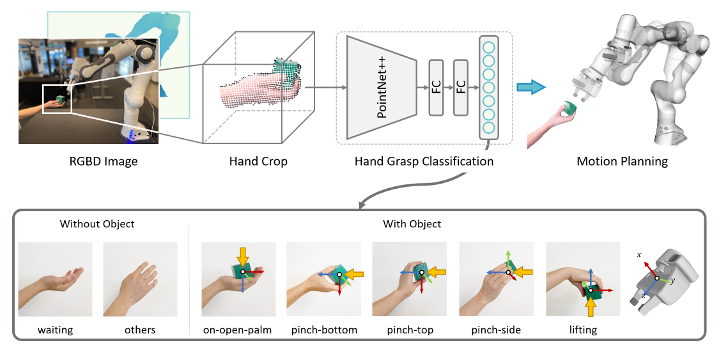

We implemented a classification model based on PointNet++ that determines which of several types of grasp a human is using. Its output was used to decide how best to approach the human hand. We had the robot replan dynamically if the human moved or their grasp changed. The task model was based on our prior work on Robust Logical-Dynamical Systems, presented at IROS 2019 in Macau.
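To make the replanning idea concrete, here is a minimal sketch in Python. It is not the system from the paper: the grasp labels, the approach directions, the 5 cm movement tolerance, and every function name below are assumptions chosen for illustration. It only shows the general pattern of keeping a plan until the classified grasp changes or the hand moves too far.

```python
import math
from dataclasses import dataclass

# Hypothetical grasp labels mapped to hypothetical approach directions
# (unit vectors in the hand frame). The real categories may differ.
APPROACH_BY_GRASP = {
    "open_palm": (0.0, 0.0, -1.0),   # come down from above
    "pinch_top": (0.0, 0.0, 1.0),    # come up from below
    "pinch_side": (1.0, 0.0, 0.0),   # come in from the free side
}


@dataclass(frozen=True)
class Observation:
    grasp: str        # label produced by the grasp classifier
    hand_xyz: tuple   # estimated hand position in metres


@dataclass(frozen=True)
class Plan:
    grasp: str
    hand_xyz: tuple
    approach: tuple


def make_plan(obs):
    """Build a plan from the latest observation."""
    return Plan(obs.grasp, obs.hand_xyz, APPROACH_BY_GRASP[obs.grasp])


def needs_replan(plan, obs, move_tol=0.05):
    """Replan if the hand moved more than move_tol or the grasp changed."""
    moved = math.dist(plan.hand_xyz, obs.hand_xyz) > move_tol
    return moved or obs.grasp != plan.grasp


def track(observations, move_tol=0.05):
    """Walk through observations, replanning whenever the plan goes stale."""
    plan = None
    replans = 0
    for obs in observations:
        if plan is None:
            plan = make_plan(obs)
        elif needs_replan(plan, obs, move_tol):
            plan = make_plan(obs)
            replans += 1
    return plan, replans
```

Calling `track` on a stream of observations returns the final plan and the number of times it was rebuilt. Small hand jitter below the tolerance leaves the plan untouched, which avoids needless replanning.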

Above, you can see an overview of the system we proposed. To read more, you can check out my co-author’s site or the paper on arXiv. Our work was also covered in a couple of news articles:

- NVIDIA: NVIDIA Researchers Use AI to Teach Robots How to Improve Human-to-Robot Interactions

- VentureBeat: Nvidia researchers use AI to teach robots how to hand objects to humans

- AIM: ‘Human-To-Robots’ Handover Problem Seems To Be Solved

Our work will be presented at IROS 2020.