CoSTAR is the Collaborative System for Task Automation and Recognition, a framework that contains tools for authoring task plans and “teaching” robots how to perform complex behaviors that may optionally involve working alongside humans.

We implemented our system on a KUKA LBR iiwa 14, courtesy of the KUKA Innovation Award competition, with a Robotiq S Model adaptive gripper. We attached an AR marker to the wrist and used CamOdoCal to compute the transform from the end effector to the wrist. This lets us quickly move an externally mounted camera and reconfigure our workspace to best suit different tasks.

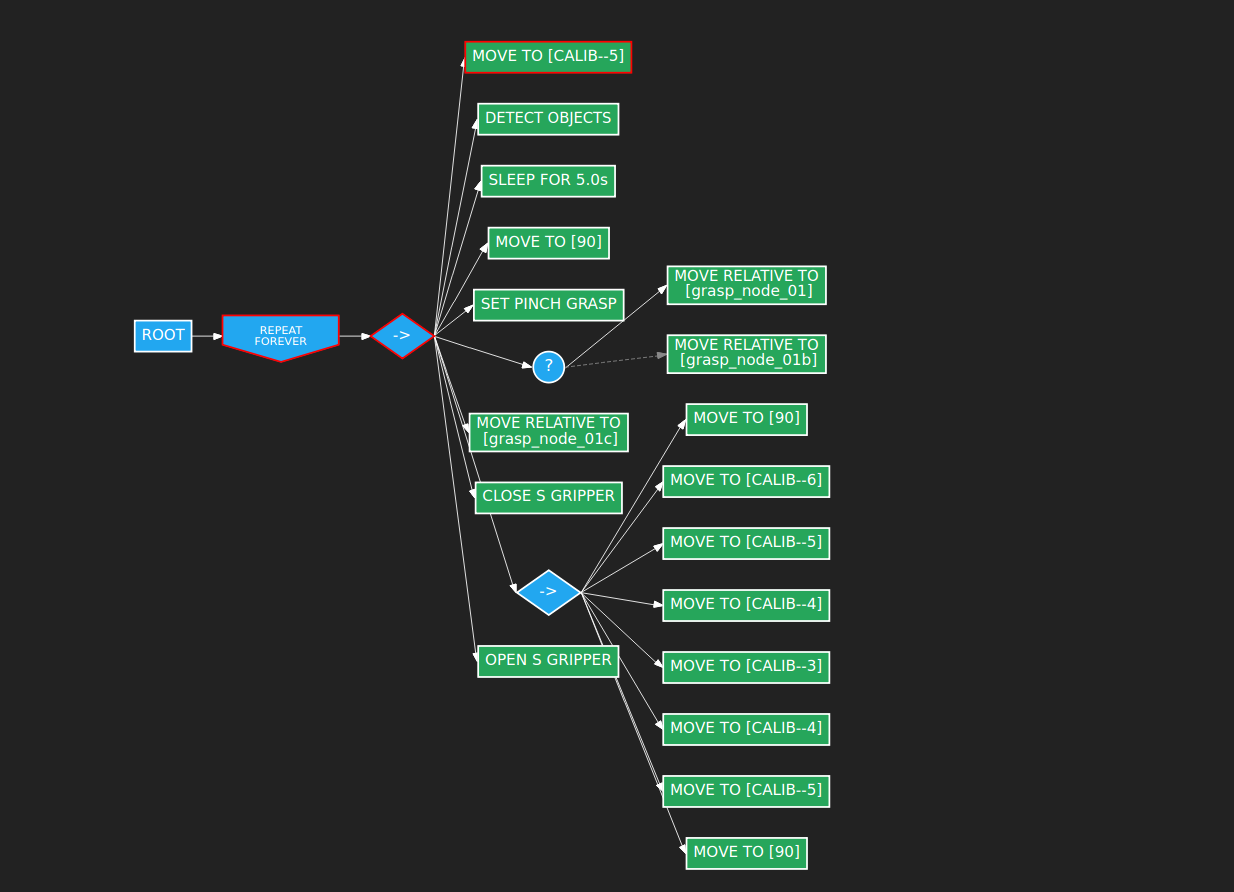

The CoSTAR interface is based on behavior trees. Behavior trees have been used in both robotics and video games, and were initially designed to model complex systems. From our point of view, their main advantage is that they simplify task authoring: the order in which leaf nodes execute is determined by the logic nodes above them. As a result, trees compose easily, so we can drop one tree or node into another tree and expect everything to work.
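To make the composition idea concrete, here is a minimal sketch of a behavior tree in Python. It is not CoSTAR's implementation: the class names (`Action`, `Sequence`, `Selector`) and the leaf names are hypothetical, and the sketch omits the "running" status that practical behavior trees use for long-lived actions. It only shows how logic nodes decide the order in which leaves execute, and how one subtree can be dropped into another.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


class Action:
    """Leaf node. Here it only records its name and reports a fixed result."""

    def __init__(self, name, succeeds=True):
        self.name = name
        self.succeeds = succeeds

    def tick(self, log):
        log.append(self.name)
        return Status.SUCCESS if self.succeeds else Status.FAILURE


class Sequence:
    """Logic node: ticks children in order and stops at the first failure."""

    def __init__(self, *children):
        self.children = children

    def tick(self, log):
        for child in self.children:
            if child.tick(log) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Selector:
    """Logic node: ticks children in order and stops at the first success."""

    def __init__(self, *children):
        self.children = children

    def tick(self, log):
        for child in self.children:
            if child.tick(log) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


# Two small subtrees, built independently (all leaf names are made up).
pick_up = Sequence(
    Action("open_gripper"), Action("move_to_grasp"), Action("close_gripper")
)
find_object = Selector(
    Action("detect_object", succeeds=False),  # pretend detection fails
    Action("ask_operator"),                   # fallback leaf
)

# Composition: subtrees are dropped into a larger tree like any other node.
task = Sequence(find_object, pick_up, Action("move_to_waypoint"))

log = []
result = task.tick(log)
print(result, log)
```

Because every node exposes the same `tick` interface, the parent does not need to know whether a child is a single leaf or an entire subtree, which is what makes this kind of reuse cheap.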

For example, here is a behavior tree that picks up a node and moves it around through a preset pattern:

The combination of software and user interface lets us teach positions and store them relative to different types of objects. We can, for example, teach the robot how to manipulate components of a structure, or teach it how to grab a sander.
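Storing a taught position relative to an object amounts to a change of reference frame. The sketch below illustrates the idea with planar poses `(x, y, theta)`. This is a simplification chosen for brevity (a real arm would use full 3D transforms), and the function names `compose`, `inverse`, `teach`, and `replay` are hypothetical rather than part of CoSTAR.

```python
import math


def compose(a, b):
    """Express pose b, given in frame a, in a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


def inverse(a):
    """Invert a planar pose, so that compose(a, inverse(a)) is the identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), s * ax - c * ay, -at)


def teach(object_pose, gripper_pose):
    """Store the gripper pose relative to the object (both given in world frame)."""
    return compose(inverse(object_pose), gripper_pose)


def replay(new_object_pose, relative_pose):
    """Recover a world-frame gripper goal for an object seen at a new pose."""
    return compose(new_object_pose, relative_pose)


# Teach once: the object sits at (1, 0) and the gripper is 0.5 to its left.
relative = teach((1.0, 0.0, 0.0), (1.0, 0.5, 0.0))

# Replay later: the object has moved and rotated by 90 degrees.
goal = replay((2.0, 1.0, math.pi / 2), relative)
print(relative, goal)
```

The taught pose is stored once in the object's frame, so the same grasp or waypoint can be reused wherever the object is later detected.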

This video shows an example of how we can use CoSTAR to teach a simple sanding task:

Executing that sanding task: