The goal of our “visual task planning” is to simulate, at a very high level, the task-relevant details associated with the performance of a particular task. This will discuss some results from CoSTAR Plan, and how we are bringing VTP to real robots.

Once we can imagine the next few high-level actions, we should be able to use our imagination to plan:

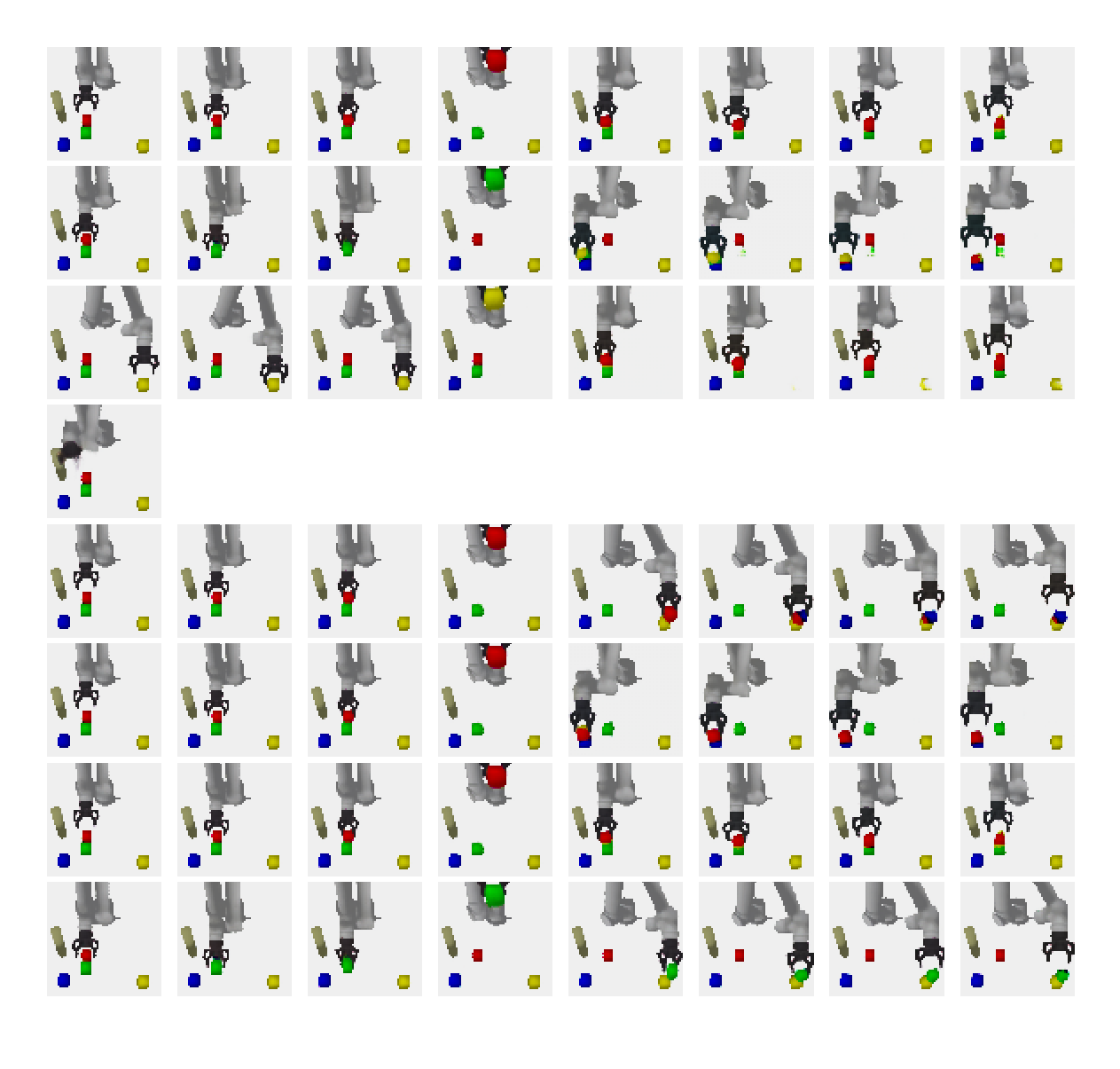

Above is an example of what this looks like in practice. Each row is a separate “rollout”, or simulation of what might happen in a particular world. In the fourth row, we see a failure – the robot predicts that if it tries to grasp the blue block, it will run into an obstacle.

Note that for this task, we do not actually care which block gets stacked on which object – so note that the method will occasionally lose track of some of these details further out into the future (see rows 2 and 3, for example). These are still good results – they show exactly what the robot intends to do, and how it will do this. You can check out the simulation dataset for yourself.

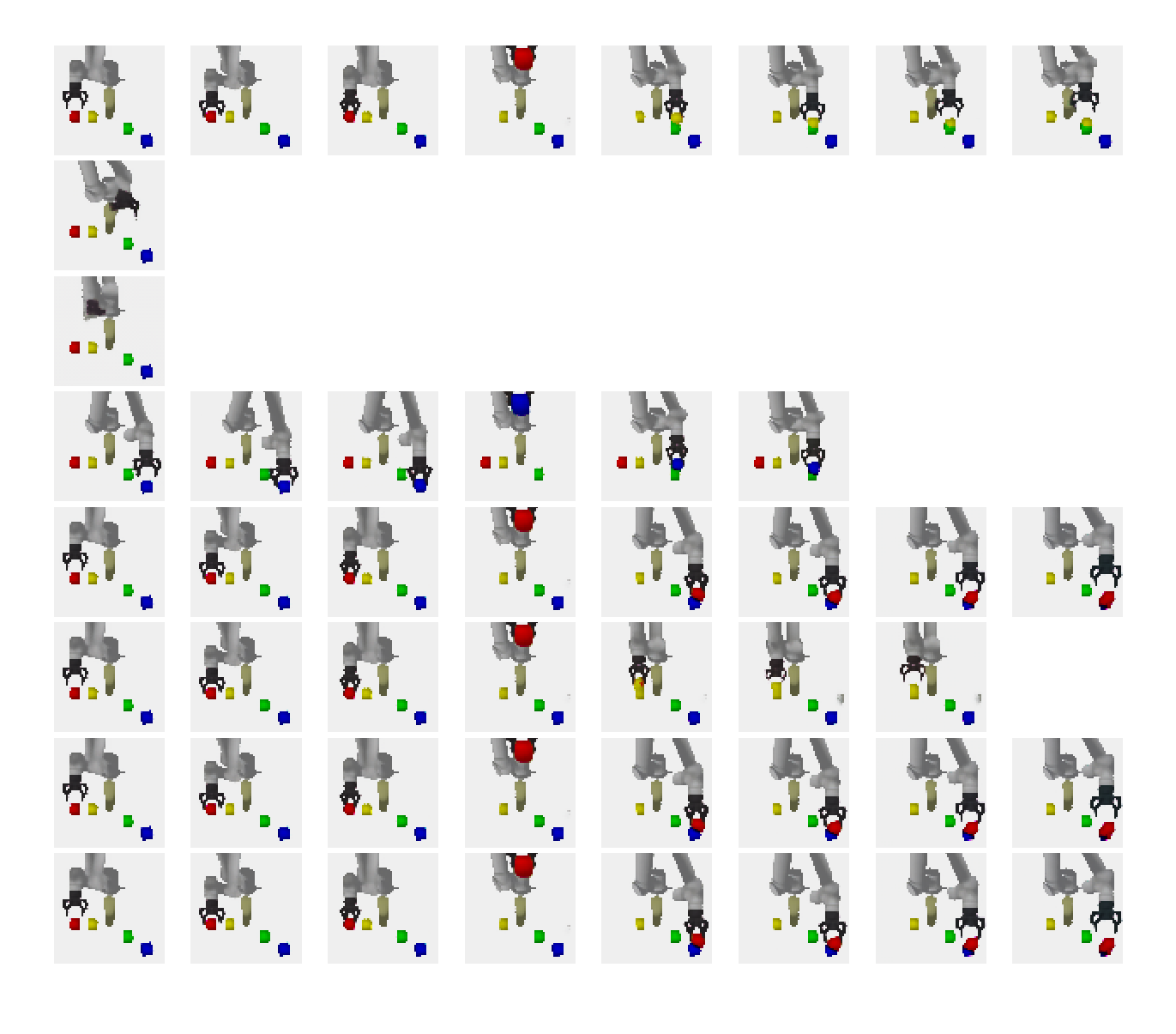

This method can predict some very interesting failures.

In the 2nd and 3rd rows of the image above, we see predictable failures: the system knows that if it tries to grab the green or yellow blocks it will hit the obstacle. In the fifth row we see an intersting failure, though: it realizes that when it opens the gripper, the jaws of the gripper will swing back and hit the obstacle, causing another less obvious failure!

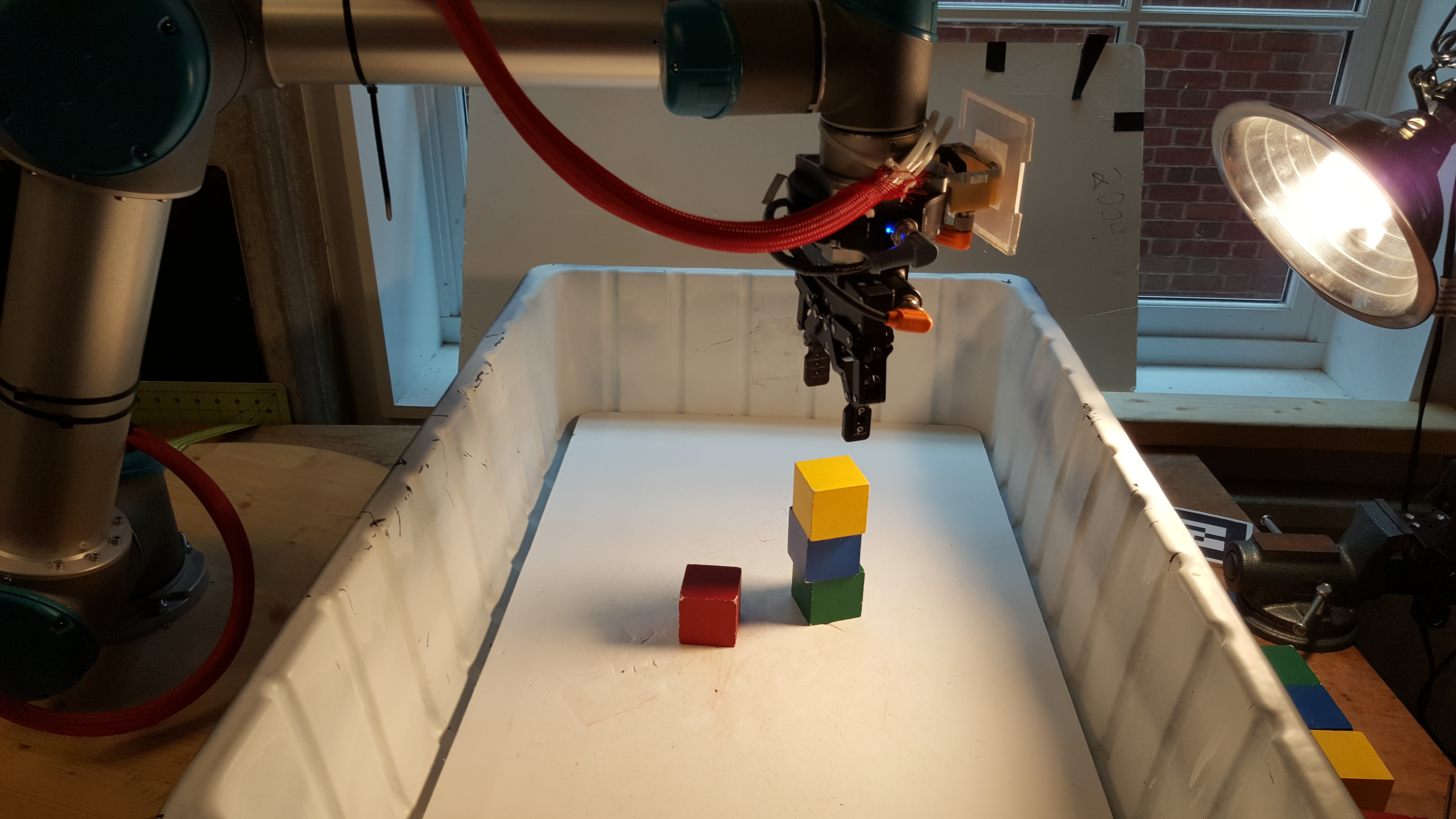

Recently, we have begun collecting data for our visual robot task planning project so that we can test it in the “real world.” This involves a lot of challenges we did not have to deal with before.

Our setup is a Universal Robots UR5, a common collaborative industrial robot. We use the open source UR Modern Driver to communicate with the robot as a part of the Robot Operating System (ROS).

We set up a procedure where the robot will randomly build a stack, then unstack the blocks, using our CoSTAR tool. You can see a video below.

The paper is available on ArXiv: Visual Robot Task Planning.